13 Mins

Large language models (LLMs), with their broad capabilities, have transformed the field of natural language processing (NLP). These models, trained on enormous text datasets using transformer-based architectures such as BERT, GPT, or T5, can perform a variety of tasks such as text generation, translation, summarization, and question answering.

However, while LLMs are effective tools, they often fall short on specific tasks or domains because they were trained on broad, general-purpose data corpora.

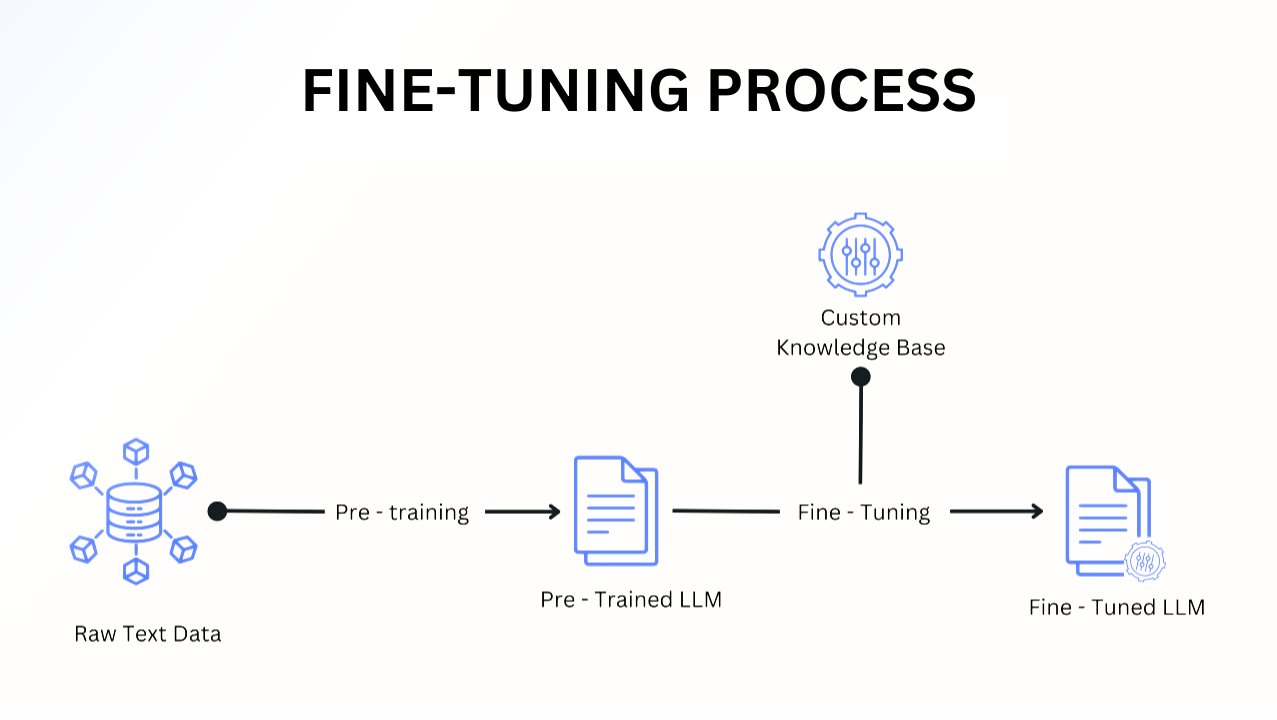

Fine-tuning lets users adapt a pre-trained LLM to a specific downstream task by continuing to train it on labeled data with gradient descent optimization and backpropagation. By fine-tuning a model on a small, carefully curated dataset of task-specific examples, you can improve its performance on that task while retaining its general language knowledge.
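To make the idea concrete, here is a toy sketch in plain Python: a logistic-regression "model" whose weights stand in for pre-trained parameters is updated with a few gradient-descent steps on a tiny labeled dataset. The weights, data, learning rate, and epoch count are invented for illustration. A real LLM has billions of parameters and its gradients come from backpropagation through the whole network (usually via a framework such as PyTorch), but the update rule has the same shape: start from existing weights and nudge them to reduce a task-specific loss.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(weights, bias, data):
    """Mean binary cross-entropy over (features, label) pairs."""
    total = 0.0
    for x, y in data:
        p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
        p = min(max(p, 1e-12), 1 - 1e-12)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(data)

def fine_tune(weights, bias, data, lr=0.1, epochs=50):
    """Continue training from existing (pre-trained) weights with plain gradient descent."""
    weights = list(weights)  # copy so the pre-trained weights are left untouched
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in data:
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # derivative of binary cross-entropy w.r.t. the logit
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        n = len(data)
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

# Hypothetical "pre-trained" parameters and a tiny task-specific labeled set.
pretrained_w, pretrained_b = [0.5, -0.2], 0.0
task_data = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([1.0, 1.0], 1), ([0.0, 0.0], 0)]

before = log_loss(pretrained_w, pretrained_b, task_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data)
after = log_loss(tuned_w, tuned_b, task_data)
print(f"loss before: {before:.3f}, after: {after:.3f}")
```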

For instance, a Google study found that a pre-trained LLM's accuracy increased by 10% when it was fine-tuned for sentiment analysis. In this blog, we examine what fine-tuned LLMs are and how fine-tuning can lead to more accurate, context-specific outputs, lower training costs, and significantly better model performance.

LLMs are a subset of foundation models, which are general-purpose machine learning models capable of solving a wide range of tasks using large-scale neural networks. Fine-tuned LLMs are models that have undergone additional training using task-specific objectives, which increases their usefulness for particular tasks and industries, like software development.

Despite their exceptional versatility, LLMs can perform poorly on highly specific tasks that require domain expertise: they are trained on general linguistic data, so they understand syntax, semantics, and context but lack depth in niche domains.

For various applications, the base LLM can be fine-tuned on smaller labeled datasets focused on specific domains. Fine-tuning uses supervised learning: the model is trained with a gradient-based optimization algorithm such as AdamW to minimize a task-specific loss function like cross-entropy (for classification or next-token prediction) or mean squared error (for regression). The labeled data typically consists of prompt-response pairs, which help the model learn the relationship between inputs and outputs so that it can generalize to previously unseen data within the domain.
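A minimal sketch of the two pieces named above, written in plain Python for a handful of scalar parameters: a cross-entropy loss over class logits, and an AdamW update, which is Adam with weight decay applied directly to the parameters instead of being folded into the gradient. The default hyperparameters are commonly used values and are assumptions here; in a real fine-tuning job you would use a framework implementation such as PyTorch's `torch.optim.AdamW`.

```python
import math

def cross_entropy(logits, target):
    """Cross-entropy of one example: -log softmax(logits)[target]."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

class AdamW:
    """AdamW over a flat list of float parameters (decoupled weight decay)."""

    def __init__(self, params, lr=1e-3, betas=(0.9, 0.999), eps=1e-8, weight_decay=0.01):
        self.params = list(params)
        self.lr, self.eps, self.wd = lr, eps, weight_decay
        self.b1, self.b2 = betas
        self.m = [0.0] * len(self.params)  # first-moment (mean) estimates
        self.v = [0.0] * len(self.params)  # second-moment (uncentered variance) estimates
        self.t = 0

    def step(self, grads):
        self.t += 1
        for i, g in enumerate(grads):
            # Decoupled weight decay: shrink the parameter directly.
            self.params[i] -= self.lr * self.wd * self.params[i]
            self.m[i] = self.b1 * self.m[i] + (1 - self.b1) * g
            self.v[i] = self.b2 * self.v[i] + (1 - self.b2) * g * g
            m_hat = self.m[i] / (1 - self.b1 ** self.t)  # bias correction
            v_hat = self.v[i] / (1 - self.b2 ** self.t)
            self.params[i] -= self.lr * m_hat / (math.sqrt(v_hat) + self.eps)
        return self.params

# Usage: minimize f(p) = (p - 3)^2, whose gradient is 2 * (p - 3).
opt = AdamW([0.0], lr=0.1, weight_decay=0.0)
for _ in range(500):
    opt.step([2 * (opt.params[0] - 3)])
print(opt.params[0])  # ends close to 3
print(cross_entropy([2.0, 0.5, -1.0], target=0))
```

In a real model the gradients passed to `step` would come from backpropagating the cross-entropy loss; here the quadratic objective simply makes the optimizer's behavior easy to check.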

How Does the Data Labeling Process Help in Fine-Tuning?

The annotations needed for fine-tuning are instruction-response pairs, in which each input corresponds to an expected output. Selecting and categorizing data during labeling may appear to be a simple operation, but several factors make it complex.
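Instruction-response pairs are often stored as JSON Lines, one example per line. The sketch below uses hypothetical field names (`instruction`, `response`); the exact schema depends on the training framework you use. It also drops records with an empty input or expected output, since a blank target gives the model nothing useful to learn.

```python
import json

def to_jsonl(pairs):
    """Serialize (instruction, response) pairs to JSON Lines, skipping incomplete pairs."""
    lines = []
    for instruction, response in pairs:
        if not instruction.strip() or not response.strip():
            continue  # drop examples with an empty input or expected output
        record = {"instruction": instruction.strip(), "response": response.strip()}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)

examples = [
    ("Classify the sentiment: 'The update broke my workflow.'", "negative"),
    ("Summarize: 'The meeting moved from Monday to Thursday.'", "The meeting is now on Thursday."),
    ("Translate to French: 'Good morning'", ""),  # incomplete, will be skipped
]
print(to_jsonl(examples))
```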

Annotation is difficult partly because text data can be subjective. A set of standard data labeling practices can address many of these issues. First, make sure you fully understand the problem you are trying to solve before you begin: the more information you have, the better equipped you will be to build a dataset that covers all edge cases and variations.
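Because text labels can be subjective, one common practice is to have several annotators label the same item and aggregate their answers. A minimal sketch, assuming each item carries a list of labels from different annotators: accept the majority label when there is a clear winner, and flag ties or weak agreement for expert review. The two-thirds agreement threshold is an illustrative assumption.

```python
from collections import Counter

def aggregate(labels, min_agreement=2 / 3):
    """Return (label, needs_review) for one item's annotator labels."""
    counts = Counter(labels).most_common()
    top_label, top_count = counts[0]
    tie = len(counts) > 1 and counts[1][1] == top_count
    agreement = top_count / len(labels)
    if tie or agreement < min_agreement:
        return None, True  # no reliable consensus: send to a reviewer
    return top_label, False

items = {
    "review-1": ["positive", "positive", "positive"],
    "review-2": ["positive", "neutral", "negative"],
    "review-3": ["negative", "negative", "neutral"],
}
for item_id, labels in items.items():
    print(item_id, aggregate(labels))
```

Items that keep getting flagged are often a sign that the labeling guidelines need a clearer rule for that kind of case.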

When selecting annotators, make your vetting procedure as thorough as possible. Data labeling requires close attention to detail, sound reasoning, and domain insight, so the quality of your annotators directly shapes the quality of the dataset.
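One way to make annotator vetting measurable is to have candidates label a small reference ("gold") set and compare their answers with an agreement statistic. The sketch below computes Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sample labels are invented, and any pass/fail threshold you apply is an assumption to set for your own project.

```python
from collections import Counter

def cohens_kappa(a, b):
    """Cohen's kappa between two equal-length label sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("label sequences must be non-empty and the same length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    freq_a, freq_b = Counter(a), Counter(b)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical label throughout
    return (observed - expected) / (1 - expected)

gold = ["pos", "neg", "neg", "pos", "neu", "pos"]
candidate = ["pos", "neg", "pos", "pos", "neu", "pos"]
print(round(cohens_kappa(gold, candidate), 3))  # → 0.714
```

Here the candidate agrees with the gold labels on 5 of 6 items (83%), but kappa is lower because some of that agreement would be expected by chance.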

The following are some techniques for NLP and LLM fine-tuning and data preparation that can help ensure a successful fine-tuning procedure.

Starting with a smaller model makes fine-tuning easier. Models like DistilBERT or ALBERT enable quicker testing and iteration because they use less memory and processing power. This strategy is especially useful when resources are limited. Once the process has been refined on a smaller scale, the lessons learned can be used to fine-tune larger models.

Experimenting with different data types can greatly improve the effectiveness of fine-tuning. By accepting a variety of input formats, including structured data (e.g., CSV files), unstructured text (e.g., logs), images, or even multimodal data, models can learn to handle a wider range of real-world inputs. This diversity can help produce more robust embeddings and better-contextualized outputs.
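Before mixing data types, it usually helps to normalize each source into the same training-record shape. A minimal sketch, assuming a hypothetical CSV of support tickets with `question` and `answer` columns and a hypothetical log format `LEVEL component: message`; both layouts are invented for illustration. Images and other modalities would need a multimodal model and are not covered here.

```python
import csv
import io
import re

# Assumed log layout: "LEVEL component: message", e.g. "ERROR auth-service: token expired".
LOG_PATTERN = re.compile(r"^(?P<level>[A-Z]+)\s+(?P<component>[\w.-]+):\s+(?P<message>.+)$")

def records_from_csv(text):
    """Structured source: one instruction-response record per CSV row."""
    reader = csv.DictReader(io.StringIO(text))
    return [{"instruction": row["question"], "response": row["answer"], "source": "csv"}
            for row in reader]

def records_from_logs(text):
    """Unstructured source: turn each parseable log line into a severity-classification example."""
    records = []
    for line in text.splitlines():
        match = LOG_PATTERN.match(line.strip())
        if match is None:
            continue  # skip lines that do not fit the assumed format
        records.append({
            "instruction": f"Classify the severity of this log message: {match['message']}",
            "response": match["level"].lower(),
            "source": "log",
        })
    return records

csv_text = "question,answer\nHow do I reset my password?,Use the 'Forgot password' link.\n"
log_text = "ERROR auth-service: token expired for user 42\nnot a log line\nWARN db.pool: connection slow\n"
dataset = records_from_csv(csv_text) + records_from_logs(log_text)
for record in dataset:
    print(record)
```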

To ensure that the model learns the appropriate patterns and nuances, the dataset should be representative of the task and domain. Techniques like stratified sampling, adversarial testing, and domain-driven data augmentation help ensure robustness. High-quality data reduces noise and errors, allowing the model to produce more precise and consistent results.
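Stratified sampling keeps each label's share the same across splits, so a rare class does not vanish from the validation set. A minimal sketch in plain Python (scikit-learn's `train_test_split(..., stratify=labels)` serves the same purpose); the 20% validation fraction, fixed seed, and toy data are assumptions.

```python
import random
from collections import defaultdict

def stratified_split(examples, label_of, val_fraction=0.2, seed=0):
    """Split examples so each label appears in train and validation in proportion."""
    by_label = defaultdict(list)
    for ex in examples:
        by_label[label_of(ex)].append(ex)
    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, val = [], []
    for label in sorted(by_label):
        group = by_label[label][:]
        rng.shuffle(group)
        # Keep at least one validation example per label when the label has 2+ examples.
        n_val = max(1, round(len(group) * val_fraction)) if len(group) > 1 else 0
        val.extend(group[:n_val])
        train.extend(group[n_val:])
    return train, val

data = [(f"text {i}", "positive") for i in range(80)] + [(f"text {i}", "negative") for i in range(80, 100)]
train, val = stratified_split(data, label_of=lambda ex: ex[1])
print(len(train), len(val))
```

With 80 positive and 20 negative examples, the validation set keeps the same 4:1 ratio (16 positive, 4 negative).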

Hyperparameter tuning is critical for improving the performance of fine-tuned models. Key parameters such as learning rate, batch size, dropout rate, gradient clipping threshold, and number of epochs must be adjusted to balance learning efficiency against overfitting. Tools like Optuna or Ray Tune can automate hyperparameter optimization.
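Tools like Optuna or Ray Tune automate this search with smarter strategies, but the underlying loop is simple. The sketch below runs plain random search over a hypothetical search space. `train_and_evaluate` is a stand-in objective invented for illustration; in a real project it would fine-tune the model with the sampled settings and return a validation loss.

```python
import math
import random

SEARCH_SPACE = {
    "learning_rate": (1e-5, 1e-3),  # sampled log-uniformly
    "batch_size": [8, 16, 32],
    "dropout": (0.0, 0.3),
    "epochs": [2, 3, 4],
}

def sample_config(rng):
    lo, hi = SEARCH_SPACE["learning_rate"]
    return {
        "learning_rate": math.exp(rng.uniform(math.log(lo), math.log(hi))),
        "batch_size": rng.choice(SEARCH_SPACE["batch_size"]),
        "dropout": rng.uniform(*SEARCH_SPACE["dropout"]),
        "epochs": rng.choice(SEARCH_SPACE["epochs"]),
    }

def train_and_evaluate(config):
    """Hypothetical stand-in for a fine-tuning run; returns a fake validation loss."""
    lr_penalty = (math.log10(config["learning_rate"]) + 4) ** 2  # pretend 1e-4 is ideal
    return lr_penalty + abs(config["dropout"] - 0.1) + 0.01 * config["epochs"]

def random_search(n_trials=20, seed=0):
    rng = random.Random(seed)
    best_config, best_loss = None, float("inf")
    for _ in range(n_trials):
        config = sample_config(rng)
        loss = train_and_evaluate(config)
        if loss < best_loss:  # keep the lowest validation loss seen so far
            best_config, best_loss = config, loss
    return best_config, best_loss

best, loss = random_search()
print(best, round(loss, 4))
```

Sampling the learning rate on a log scale is a deliberate choice: useful values span orders of magnitude, so uniform sampling would waste most trials near the top of the range.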

There are various approaches to fine-tuning model parameters for a specific need. These LLM fine-tuning methods can be broadly divided into two groups: supervised fine-tuning and reinforcement learning from human feedback (RLHF).

In supervised fine-tuning, the model is trained on a task-specific labeled dataset in which each input is paired with a correct answer or label. The model adjusts its parameters to predict these labels accurately, which adapts the knowledge it gained from pre-training on a huge dataset to the specific task at hand.
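In instruction-style supervised fine-tuning, a common refinement is to compute the loss only on the response tokens, so the model is trained to produce the answer rather than to reproduce the prompt. The sketch below builds the input and label sequences for one example. The token IDs are made up (a real pipeline gets them from the model's tokenizer), and the `-100` placeholder follows the convention of PyTorch's `CrossEntropyLoss`, whose default `ignore_index` is -100.

```python
IGNORE_INDEX = -100  # positions with this label are excluded from the loss

def build_sft_example(prompt_ids, response_ids, eos_id, max_len=None):
    """Concatenate prompt and response; mask prompt positions out of the labels."""
    input_ids = list(prompt_ids) + list(response_ids) + [eos_id]
    labels = [IGNORE_INDEX] * len(prompt_ids) + list(response_ids) + [eos_id]
    if max_len is not None:  # optional truncation to the model's context length
        input_ids, labels = input_ids[:max_len], labels[:max_len]
    return {"input_ids": input_ids, "labels": labels}

# Hypothetical token IDs for a prompt and its expected response.
example = build_sft_example(prompt_ids=[101, 2054, 2003], response_ids=[3437, 1012], eos_id=2)
print(example)
# {'input_ids': [101, 2054, 2003, 3437, 1012, 2], 'labels': [-100, -100, -100, 3437, 1012, 2]}
```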

The most widely used supervised fine-tuning approaches are:

Reinforcement learning from human feedback (RLHF) trains language models using human judgments of their outputs. By integrating this feedback into the learning process, RLHF continuously improves a model so that it generates more accurate and contextually relevant responses.
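A typical RLHF pipeline first trains a reward model on human preference data: annotators pick the better of two responses, and the reward model learns to score the chosen response higher. A common loss for this step is the pairwise (Bradley-Terry style) loss sketched below. The reward scores are made-up numbers, and the later policy-optimization stage (often PPO) is not shown.

```python
import math

def pairwise_preference_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)): small when the chosen response scores higher."""
    margin = reward_chosen - reward_rejected
    # Both branches equal log(1 + exp(-margin)); splitting them avoids overflow in exp.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

def batch_loss(pairs):
    """Average preference loss over (chosen_score, rejected_score) pairs."""
    return sum(pairwise_preference_loss(c, r) for c, r in pairs) / len(pairs)

# Hypothetical reward-model scores for three human-labeled comparisons.
pairs = [(2.1, 0.3), (0.5, 0.4), (-0.2, 1.0)]
print(round(batch_loss(pairs), 4))
```

The third pair, where the reward model prefers the rejected response, contributes the largest share of the loss, which is exactly the signal that pushes the reward model toward human preferences.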

The most commonly used RLHF procedures are:

While fine-tuning can greatly improve LLM performance on specific tasks, evaluating the fine-tuning process is vital to make sure the model performs as expected. Without proper evaluation metrics and validation techniques, models might overfit or fail to generalize to unseen data. This section gives an overview of key evaluation methods and tools for assessing fine-tuned LLM performance.

Here are some key metrics that can be monitored to measure the performance of a fine-tuned model:

1. Task-Specific Metrics:

2. Generalization Metrics:

3. Robustness Metrics:
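For a classification-style task, a hedged sketch of one metric per category might look like this: accuracy and macro-averaged F1 as task-specific metrics, the gap between training and held-out accuracy as a simple generalization check, and the accuracy drop on perturbed inputs (e.g., typos or paraphrases) as a rough robustness check. All labels, predictions, and the training accuracy below are invented for illustration.

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    scores = []
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append(f1)
    return sum(scores) / len(scores)

# Invented labels and predictions for illustration.
y_test = ["pos", "neg", "neg", "pos", "neu"]
pred_test = ["pos", "neg", "pos", "pos", "neu"]
pred_test_perturbed = ["pos", "pos", "pos", "pos", "neu"]  # same inputs with typos/paraphrases
train_accuracy = 0.98  # hypothetical accuracy on the fine-tuning data

test_accuracy = accuracy(y_test, pred_test)
print("task accuracy:", test_accuracy)
print("macro F1:", round(macro_f1(y_test, pred_test), 3))
print("generalization gap:", round(train_accuracy - test_accuracy, 3))
print("robustness drop:", round(test_accuracy - accuracy(y_test, pred_test_perturbed), 3))
```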

Here are validation techniques that one can use:
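k-fold cross-validation is one widely used validation technique: the labeled data is split into k folds, and each fold takes a turn as the held-out set. Because each fold means another fine-tuning run, it is most practical with smaller models or datasets. The sketch below only generates the index splits; it assumes the data has already been shuffled, and `k=3` in the usage is an arbitrary choice.

```python
def k_fold_indices(n_examples, k=5):
    """Yield (train_indices, val_indices) for each of k contiguous folds."""
    if not 2 <= k <= n_examples:
        raise ValueError("k must be between 2 and the number of examples")
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n_examples // k + (1 if i < n_examples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_examples))
        yield train, val
        start += size

for train_idx, val_idx in k_fold_indices(10, k=3):
    print(len(train_idx), val_idx)
```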

Here is a list of tools that can be used for validation:

Fine-tuned LLMs often face concept drift in dynamic domains where data distributions change over time. Monitoring pipelines built with tools like MLflow or Prometheus can track performance and signal when the model needs to be retrained.
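A minimal sketch of the monitoring idea, assuming you periodically receive labeled samples from production: track accuracy over a rolling window and flag the model for retraining when it drops below a threshold. The window size and threshold are illustrative assumptions, and in practice the metric would be exported to a system such as MLflow or Prometheus rather than kept only in memory.

```python
from collections import deque

class DriftMonitor:
    """Flags retraining when rolling accuracy on recent labeled samples falls too low."""

    def __init__(self, window=100, threshold=0.85):
        self.results = deque(maxlen=window)  # 1 for a correct prediction, 0 otherwise
        self.threshold = threshold

    def record(self, prediction, label):
        self.results.append(1 if prediction == label else 0)

    def rolling_accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def needs_retraining(self):
        # Only decide once the window is full, to avoid reacting to a handful of samples.
        if len(self.results) < self.results.maxlen:
            return False
        return self.rolling_accuracy() < self.threshold

monitor = DriftMonitor(window=10, threshold=0.8)
for i in range(10):
    monitor.record("pos", "pos" if i < 7 else "neg")  # 7 of 10 correct
print(monitor.rolling_accuracy(), monitor.needs_retraining())  # → 0.7 True
```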

Fine-tuned LLMs have already shown remarkable promise: domain-specific products such as MedLM and CoCounsel are used professionally in specialized applications every day. An LLM tailored to a certain domain can be a very powerful and valuable tool, but only if it is fine-tuned on relevant and reliable training data.

Automated solutions, such as employing an LLM for data labeling, can speed up the process, but creating and annotating an excellent training dataset demands human expertise.

Hiring remote LLM fine-tuning experts can help you improve the accuracy and efficacy of your data labeling process. However, hiring a remote LLM expert can be demanding and time-consuming. Hyqoo can help you streamline this process with AI.

Our AI Talent Cloud analyzes your specific requirements and preferences to recommend the most qualified professionals for your open positions. Explore our website to connect with Hyqoo specialists and effortlessly onboard remote LLM experts.

Data labeling is a key step in training large language models (LLMs): it involves annotating the training data the model uses to learn context. The data is labeled with information such as categories, relationships, or sentiment to help the model train more efficiently.

The data labeling procedure starts once the training data has been collected. Human annotators label data points using a tool such as SuperAnnotate or Supervisely, and many tools now support automated pre-labeling to make the process more efficient. To guarantee quality, put in place a QA procedure and a thorough set of guidelines that are updated regularly.
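Automated pre-labeling is usually paired with human review rather than replacing it. A minimal sketch, assuming the pre-labeling model returns a label and a confidence score for each item: low-confidence items go to a human annotator, and a random share of high-confidence pre-labels is still sampled for QA. The 0.9 threshold, 10% audit rate, and sample data are hypothetical values to tune for your own project.

```python
import random

def route_prelabels(prelabels, threshold=0.9, audit_rate=0.1, seed=0):
    """Split pre-labeled items into auto-accepted, QA-audit, and human-annotation queues."""
    rng = random.Random(seed)
    queues = {"accepted": [], "qa_audit": [], "human": []}
    for item_id, label, confidence in prelabels:
        if confidence < threshold:
            queues["human"].append(item_id)  # low confidence: annotate manually
        elif rng.random() < audit_rate:
            queues["qa_audit"].append(item_id)  # spot-check a sample of confident labels
        else:
            queues["accepted"].append((item_id, label))
    return queues

# Hypothetical output of a pre-labeling model: (item id, label, confidence).
prelabels = [("doc-1", "invoice", 0.97), ("doc-2", "contract", 0.62), ("doc-3", "invoice", 0.93)]
print(route_prelabels(prelabels))
```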

In the LLM data labeling and annotation process, teams of people typically produce and review the annotations. Although human participation is necessary to ensure accuracy, AI-assisted pre-labeling can generate labels and annotations more efficiently. Data labeling software such as Label Studio or Labelbox is commonly used.