8 Mins

Large Language Models (LLMs) help developers handle common problems across many phases of software development and can substantially reduce manual effort. They can also streamline processes, improve precision, reduce the need for hand-written documentation, and support better teamwork across the software development lifecycle.

In this article, we’ll examine how LLMs change the way code is written and how they can help engineers automate tasks in the software development lifecycle.

Here are the steps for integrating LLMs into your software development workflow:

Start by identifying areas in your workflow where LLMs can add value. In software development workflows, LLMs can be integrated into IDEs to generate code snippets, as GitHub Copilot does. LLMs can also be used to automatically generate documentation, summarize reports, or create user manuals based on system behavior or software code. Because LLMs are good at generating text, look for tasks where a model can draft the first version of something that a programmer or subject-matter expert would otherwise write by hand.

Based on the use case, choose the LLM to integrate. Consider which tasks it must handle, such as text completion, translation, question answering, and summarization. Evaluate candidate models on their performance metrics, scalability, and cost. A well-suited model offers the best balance of functionality and resource requirements.
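One simple way to compare candidates on performance, scalability, and cost is a weighted score. The sketch below is only an illustration: the model names, the numbers, and the weights are all made-up assumptions, and in practice the quality figure would come from your own evaluation set.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    quality: float      # accuracy on your own evaluation set, 0..1 (assumed metric)
    scalability: float  # normalized throughput score, 0..1 (assumed metric)
    cost: float         # normalized cost, 0..1, higher means more expensive


def score(c: Candidate, w_quality: float = 0.5,
          w_scale: float = 0.2, w_cost: float = 0.3) -> float:
    """Weighted score in which cost counts against the candidate."""
    return w_quality * c.quality + w_scale * c.scalability - w_cost * c.cost


def rank(candidates):
    """Return candidates ordered from best to worst score."""
    return sorted(candidates, key=score, reverse=True)


# Hypothetical candidates with invented numbers.
candidates = [
    Candidate("model-a", quality=0.90, scalability=0.60, cost=0.80),
    Candidate("model-b", quality=0.80, scalability=0.80, cost=0.30),
]
best = rank(candidates)[0]
print(best.name)
```

With these weights the cheaper model wins despite slightly lower quality; changing the weights to match your priorities can change the ranking.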

After selecting your model, you can fine-tune it on datasets relevant to your application. This involves training the model on domain-specific data to improve its correctness and relevance. Fine-tuning helps the model deliver accurate, contextually relevant outputs, thereby improving its performance.
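Fine-tuning usually starts with preparing example pairs in a machine-readable file. The following is a minimal sketch that writes prompt and completion pairs as JSON Lines. The field names `prompt` and `completion` are an assumption for illustration; the exact schema depends on the model provider or training framework you use.

```python
import json


def to_jsonl(examples):
    """Convert (prompt, completion) pairs to JSON Lines text.

    Pairs with an empty prompt or completion are skipped.
    """
    lines = []
    for prompt, completion in examples:
        prompt, completion = prompt.strip(), completion.strip()
        if not prompt or not completion:
            continue  # incomplete pairs would only add noise
        lines.append(json.dumps({"prompt": prompt, "completion": completion}))
    return "\n".join(lines)


# Hypothetical domain-specific examples.
examples = [
    ("Summarize the release notes for version 2.1", "Version 2.1 adds SSO login."),
    ("", "An orphaned completion that will be dropped"),
]
print(to_jsonl(examples))
```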

Integrate the LLM into your current software systems and workflows. This may entail building new apps that take advantage of the model’s features, calling the model through APIs, or designing custom user interfaces. Keeping integration procedures streamlined and compatible with your present infrastructure leads to faster adoption and less disruption.
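One way to keep the integration compatible with existing systems is to hide the model behind a thin adapter, so the rest of the codebase does not depend on a particular vendor. The sketch below assumes a hypothetical `LLMClient` class and a stub backend; a real backend would call whichever API you selected.

```python
from typing import Callable


class LLMClient:
    """Thin adapter around any function that maps a prompt to a reply."""

    def __init__(self, backend: Callable[[str], str], max_prompt_chars: int = 8000):
        self._backend = backend
        self._max = max_prompt_chars

    def complete(self, prompt: str) -> str:
        if not prompt.strip():
            raise ValueError("prompt must not be empty")
        # Truncate overly long prompts instead of sending them unchanged.
        return self._backend(prompt[: self._max])


def stub_backend(prompt: str) -> str:
    """Stand-in for a real API call, useful for local tests."""
    return f"[stub reply to {len(prompt)} chars]"


client = LLMClient(stub_backend, max_prompt_chars=5)
print(client.complete("hello world"))
```

Swapping `stub_backend` for a function that calls a real service changes one line, which is the point of the adapter.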

Create systems for ongoing feedback and development: gather user input, monitor the model’s behavior, and make iterative changes. This helps the LLM stay effective and relevant as your workflow requirements change, and it surfaces areas for improvement.
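A feedback loop can start very small. The sketch below assumes you log, for each suggestion, which use case it belonged to and whether the user accepted it; the event format and the 0.5 threshold are illustrative assumptions.

```python
from collections import defaultdict


def acceptance_rates(events):
    """events: iterable of (use_case, accepted) pairs, accepted being a bool."""
    totals = defaultdict(int)
    accepted = defaultdict(int)
    for use_case, ok in events:
        totals[use_case] += 1
        accepted[use_case] += int(ok)
    return {name: accepted[name] / totals[name] for name in totals}


def needs_review(rates, threshold=0.5):
    """Use cases whose acceptance rate falls below the threshold."""
    return sorted(name for name, rate in rates.items() if rate < threshold)


# Hypothetical feedback log.
events = [
    ("codegen", True), ("codegen", True),
    ("docs", False), ("docs", True), ("docs", False),
]
rates = acceptance_rates(events)
print(needs_review(rates))
```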

Put strong data protection procedures in place to handle security, privacy, and compliance issues, so that the use of LLMs fits organizational policy and industry requirements. Regular audits and secure data handling help preserve stakeholder trust and protect sensitive data.
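One concrete data handling measure is to redact obvious sensitive values before a prompt leaves your systems. The patterns below are a deliberately simple sketch and an assumption about what counts as sensitive; they will not catch everything and are no substitute for a proper data loss prevention review.

```python
import re

# Simplified patterns for illustration only.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SECRET = re.compile(r"(?i)\b(api[_-]?key|token|password)\s*[:=]\s*\S+")


def redact(text: str) -> str:
    """Mask email addresses and key/value style secrets in a prompt."""
    text = EMAIL.sub("[EMAIL]", text)
    # Keep the key name so the prompt stays readable, drop the value.
    return SECRET.sub(lambda m: f"{m.group(1)}=[REDACTED]", text)


print(redact("contact bob@example.com, api_key=abc123"))
```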

LLMs can contribute to the various stages of the software development lifecycle; here is a detailed explanation:

With LLMs, requirements engineers can draw on a wealth of data, such as market trends, customer reviews, and sector best practices. Using an LLM, engineers can generate user stories, product descriptions, and similar artifacts. LLMs can also review requirement documents to help check that they are consistent and free from errors and contradictions.

Design is the phase where creativity plays a key role. LLMs can aid here by assisting in the creation of design models and user interfaces. They can suggest design features and appropriate architectural patterns, styles, and strategies, and can even anticipate possible usability problems by examining patterns and trends.

To increase productivity, LLMs such as GPT-4 and tools like GitHub Copilot automate tedious jobs and help fix programming problems. Code-focused LLMs (for instance, Code Llama) are trained on vast datasets, including code repositories, technical forums, coding platforms, documentation, and web data relevant to programming. This extensive training allows them to generate code by drawing on the context of surrounding code, comments, function names, and variable names.

These models are efficient at producing test cases from user requirements. For instance, OpenAI’s GPT models can generate test cases and scripts and can assist with security audits. LLMs can improve test coverage and application resilience by spotting subtle edge cases and possible security flaws that conventional approaches can miss.
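Generated tests still need a sanity check before they join a test suite. As a minimal sketch, assuming the model returns Python test code as plain text, the helper below rejects output that does not parse and lists the top-level test functions it found. The `test_` naming convention and the sample output are assumptions for illustration.

```python
import ast


def extract_test_names(generated: str):
    """Return names of top-level test functions, or [] if the code is invalid."""
    try:
        tree = ast.parse(generated)
    except SyntaxError:
        return []  # never add unparseable output to the suite
    return [
        node.name
        for node in tree.body
        if isinstance(node, ast.FunctionDef) and node.name.startswith("test_")
    ]


# Hypothetical model output.
generated = (
    "def test_empty_list():\n"
    "    assert sorted([]) == []\n"
    "\n"
    "def helper():\n"
    "    pass\n"
    "\n"
    "def test_duplicates():\n"
    "    assert sorted([2, 1, 2]) == [1, 2, 2]\n"
)
print(extract_test_names(generated))
```

Parsing only confirms the syntax; a human reviewer or a test run still has to confirm that the assertions are meaningful.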

LLMs can produce software project documentation just as effectively as they handle other development tasks. Based on resources like source code and system specifications, the models can produce documentation quickly. Developers may prompt the models to generate code documentation, API reference material, user manuals, and developer guides.
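To ground a documentation request in the source code, it helps to tell the model exactly which functions need attention. The sketch below uses Python’s standard `ast` module to find public functions without docstrings and builds a prompt from them. The prompt wording and helper names are illustrative assumptions.

```python
import ast


def public_signatures(source: str):
    """Return (signature, has_docstring) for each public top-level function."""
    result = []
    for node in ast.parse(source).body:
        if isinstance(node, ast.FunctionDef) and not node.name.startswith("_"):
            args = ", ".join(a.arg for a in node.args.args)
            has_doc = ast.get_docstring(node) is not None
            result.append((f"{node.name}({args})", has_doc))
    return result


def doc_prompt(source: str) -> str:
    """Build a documentation prompt, or return '' if nothing is missing."""
    missing = [sig for sig, has_doc in public_signatures(source) if not has_doc]
    if not missing:
        return ""
    listing = "\n".join(f"- {sig}" for sig in missing)
    return (
        "Write a docstring for each of these Python functions:\n"
        f"{listing}\n\nSource:\n{source}"
    )


source = (
    "def add(a, b):\n"
    "    return a + b\n"
    "\n"
    "def _hidden(x):\n"
    "    return x\n"
    "\n"
    "def documented(x):\n"
    '    """Return x unchanged."""\n'
    "    return x\n"
)
print(doc_prompt(source))
```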

Software developers should take a deliberate approach when using LLM tools. Let’s examine some of the key recommendations for using AI effectively.

LLMs can respond effectively to developers’ queries when given the right prompts. Developers must learn to write effective prompts in order to use LLMs to build, test, deploy, and manage complex software solutions. To get the best results, follow the practices below.

State what you require clearly and specifically so that the model can respond correctly. For example, “Generate a code for sorting” is too vague. A better prompt is “Write a Java function that sorts an array of integers in ascending order using the quick sort algorithm.”
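A small template can make this habit routine by refusing to build a prompt until the language, the artifact, and the goal are all filled in. The helper below is a hypothetical sketch, not part of any library; its usage reproduces the specific prompt from the example above.

```python
def build_prompt(language: str, artifact: str, goal: str, details=()) -> str:
    """Assemble a specific request; raise if a required part is missing."""
    required = {"language": language, "artifact": artifact, "goal": goal}
    missing = [name for name, value in required.items() if not value.strip()]
    if missing:
        raise ValueError("missing prompt parts: " + ", ".join(missing))
    prompt = f"Write a {language} {artifact} that {goal}"
    if details:
        prompt += " " + " ".join(details)
    return prompt + "."


print(build_prompt(
    "Java",
    "function",
    "sorts an array of integers in ascending order",
    ["using the quick sort algorithm"],
))
```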

Always state the exact task you want performed. This makes it more likely that the generated output is correct.

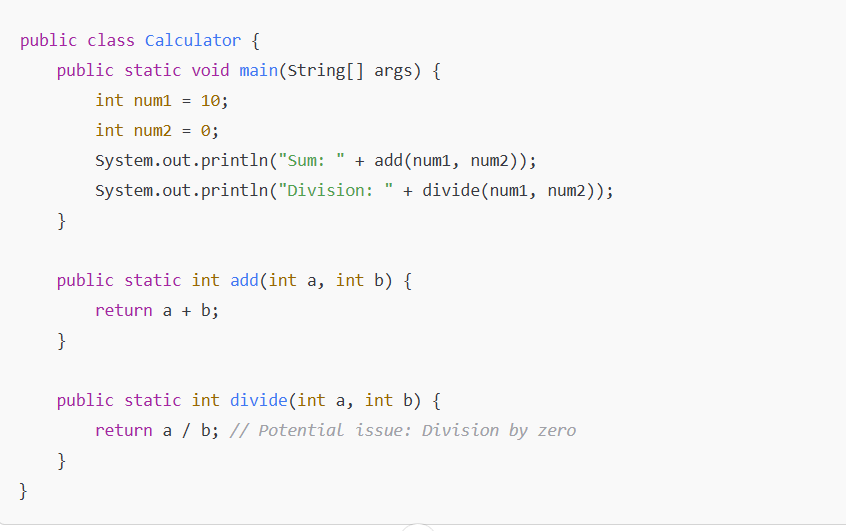

For example, the prompt “Check if there’s anything wrong with this code.” is too open-ended. A more precise version is “Scan the following Java code for potential issues such as performance bottlenecks, unhandled exceptions, or logical errors. Here’s the code:” followed by the code to review.

Write prompts in the active voice, which gives the model clear instructions and tends to produce more relevant output. For example, the prompt “The code should be checked for errors.” can be rewritten as “Check this code for errors and suggest fixes.”

Including examples in the prompt can increase the relevance and quality of the response. For example, the prompt “Explain recursion.” can be reframed to ask for an example: “Explain recursion in Python with an example of a function that calculates the factorial of a number.”
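For reference, the kind of answer the more specific recursion prompt is asking for might include a function like the one below. This is a hand-written illustration, not actual model output.

```python
def factorial(n: int) -> int:
    """Compute n! recursively for a non-negative integer n."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n <= 1:
        return 1  # base case stops the recursion
    return n * factorial(n - 1)  # recursive case shrinks the problem


print(factorial(5))
```

Because the prompt names the language and the example, the response can be checked directly by running it, which the vaguer prompt does not allow.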

LLMs are changing the way developers write code and work together on projects, from real-time help to producing code from natural language, while also raising ethical questions. Because LLMs automate routine work, developers can concentrate on more complex tasks and strategic decisions. Integrating LLMs into your software development lifecycle can automate tasks, save time, and improve precision.

If you are looking to hire an AI integration specialist to streamline your software development process, you can check out Hyqoo. We have a global pool in our AI Talent Cloud, and the AI-powered platform helps to shortlist skilled talent within minutes who perfectly match your requirements. By streamlining the hiring process, you can cut your cost per hire by as much as 40% and close the vacancy within 2-3 days. Connect with the Hyqoo Experts today!

Large language models, or LLMs, are generative AI models that can interpret input written in human language and produce relevant output in human language.

LLMs can automate various tasks across different phases of the Software Development Lifecycle. Engineers can leverage vast datasets, market insights, and other information to create design models and user interfaces. Additionally, LLMs enable automated code generation and assist in creating test cases, streamlining the entire development process.

Integrating LLMs into the workflow requires identifying the use case, selecting the right model, training and fine-tuning the model, and integrating it with the system.