Selecting the Right Framework for Effective Model Evaluation

Model evaluation has become an important method for improving the performance and ROI of machine learning models, including Large Language Models (LLMs). It involves applying robust metrics, rigorous methodologies, and tailored frameworks to ensure models deliver reliable results in real-world applications.

Model evaluation can significantly improve a model’s performance by methodically identifying inefficiencies in data preprocessing, algorithm selection, and hyperparameter tuning. A well-designed ML model evaluation framework also reveals opportunities for improvement through feature engineering and model architecture changes, and uses data-trend analysis and anomaly detection tools to flag emerging problems. This keeps the model aligned with its original purpose and improves its efficacy, scalability, and reliability.

However, a one-size-fits-all method of model evaluation is inefficient. Evaluations must take into account diverse applications, context-specific performance metrics, and operational adaptability. They should also assess computational scalability, ethical considerations like bias mitigation, and real-world applicability.

Adapting model evaluation to your business requirements helps you get the most out of your ML model evaluation framework and keep your models accurate, efficient, and dependable under changing conditions.

This guide offers an in-depth overview of machine learning model evaluation frameworks. It emphasizes technical metrics such as log loss, the Matthews correlation coefficient (MCC), and model calibration techniques, alongside standard best practices, to help you select and maintain the models that best meet your requirements.
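As a rough illustration of the three technical metrics named above, the sketch below computes binary log loss, MCC, and the Brier score (a common way to check how well predicted probabilities are calibrated) in plain Python. The labels and probabilities are made-up sample values, and the 0.5 decision threshold is an assumption; in practice, sklearn.metrics provides log_loss, matthews_corrcoef, and brier_score_loss for the same purpose.

```python
import math


def log_loss(y_true, p_pred, eps=1e-15):
    """Mean binary cross-entropy; p_pred holds each sample's predicted P(class = 1)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees 0
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)


def mcc(y_true, y_pred):
    """Matthews correlation coefficient for binary 0/1 labels (0.0 if undefined)."""
    pairs = list(zip(y_true, y_pred))
    tp, tn = pairs.count((1, 1)), pairs.count((0, 0))
    fp, fn = pairs.count((0, 1)), pairs.count((1, 0))
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


def brier_score(y_true, p_pred):
    """Mean squared gap between predicted probabilities and 0/1 outcomes; lower is better."""
    return sum((p - y) ** 2 for y, p in zip(y_true, p_pred)) / len(y_true)


y_true = [1, 0, 1, 1, 0, 0]
probs = [0.9, 0.2, 0.6, 0.4, 0.1, 0.3]          # hypothetical model outputs
y_pred = [1 if p >= 0.5 else 0 for p in probs]  # assumed 0.5 decision threshold

print(f"log loss: {log_loss(y_true, probs):.3f}")
print(f"MCC:      {mcc(y_true, y_pred):.3f}")
print(f"Brier:    {brier_score(y_true, probs):.3f}")
```

Log loss and the Brier score both work on the raw probabilities, so they penalize a confident wrong answer more than a hesitant one, while MCC uses only the thresholded labels and stays informative when classes are imbalanced.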

What is Model Evaluation?

Model evaluation is a critical stage in the Machine Learning (ML) workflow that determines how well a trained model performs on new data. It combines quantitative metrics, diagnostic visualizations, and robustness testing to assess performance both on a fixed test set and as conditions change over time.

ML and LLM evaluation frameworks measure the overall accuracy, precision, recall, and dependability of a model’s predictions. Evaluation also shows how well a model generalizes, how it handles edge cases, and how robust it is to out-of-distribution data, which helps decide whether it is ready for practical use.

Model evaluation methods assess a trained model’s effectiveness and efficiency. These techniques let us examine the model’s capacity to generalize, handle noisy datasets, and make accurate predictions on previously unseen data, using evaluation protocols such as k-fold cross-validation and stratified sampling.

Understanding a model’s strengths and shortcomings, in turn, lets us iteratively adjust hyperparameters, explore alternative loss functions, and improve its performance in deployment.

Key Metrics for Model Evaluation

Several metrics and methodologies are used to evaluate a machine learning model. Together, they provide a multidimensional understanding of model performance, covering both predictive accuracy and operational reliability. The following metrics are important for ensuring your machine learning models remain reliable, interpretable, and adaptable to diverse tasks:

- Accuracy: A commonly used metric that represents the percentage of correct predictions made by the model. While intuitive, accuracy can be misleading on imbalanced datasets (a model that always predicts the majority class of a 95:5 dataset scores 95%), so it requires complementary metrics for a holistic view.

- Precision: It measures the model’s true positive predictions relative to all of its positive predictions. It is crucial in applications like fraud detection and medical diagnostics where false positives carry significant costs.

- Recall: Measures the proportion of actual positive cases in the dataset that the model correctly predicts as positive. This is especially useful in scenarios where missing true positives could lead to critical failures.

- F1 Score: The harmonic mean of precision and recall, summarizing both in a single number. Because it balances the two, it is more informative than accuracy on datasets with uneven class distributions.

- Confusion Matrix: Tabulates predicted classes against actual classes (true positives, false positives, true negatives, and false negatives). Most of the metrics above can be calculated from it, and it reveals false positives, false negatives, and overall misclassification patterns.

- BLEU/ROUGE scores: Used to assess the quality of generated text by measuring its n-gram overlap with reference texts. BLEU is widely used for machine translation and ROUGE for text summarization.

- Receiver Operating Characteristic (ROC) Curve: Shows the model’s performance graphically by plotting the true positive rate against the false positive rate across classification thresholds. This makes it useful for choosing a decision threshold.

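- Area Under the Curve (AUC): Summarizes the ROC curve as a single number between 0 and 1. It equals the probability that the model scores a randomly chosen positive example above a randomly chosen negative one, so 0.5 corresponds to random guessing and a higher AUC indicates better discrimination between classes.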
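To make the definitions above concrete, here is a minimal, dependency-free sketch that derives the confusion-matrix counts and the metrics built on them, plus ROC AUC computed through its ranking interpretation. The labels, scores, and 0.5 threshold are invented for illustration; in practice the sklearn.metrics module (confusion_matrix, precision_score, recall_score, f1_score, roc_auc_score) is the usual choice.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    return pairs.count((1, 1)), pairs.count((0, 1)), pairs.count((0, 0)), pairs.count((1, 0))


def summarize(y_true, y_pred):
    """Accuracy, precision, recall, and F1 derived from the confusion-matrix counts."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(y_true), "precision": precision, "recall": recall, "f1": f1}


def roc_auc(y_true, scores):
    """Probability that a random positive outscores a random negative (ties count as half)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.3, 0.6, 0.2, 0.1, 0.4, 0.05]  # hypothetical model scores
y_pred = [1 if s >= 0.5 else 0 for s in scores]

print(confusion_counts(y_true, y_pred))  # (2, 1, 4, 1)
print(summarize(y_true, y_pred))
print(round(roc_auc(y_true, scores), 3))  # 0.867
```

The threshold only affects the confusion-matrix metrics; AUC works directly on the raw scores, which is why the ROC curve is useful for comparing thresholds before committing to one.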

Top 4 Model Evaluation Methods

Model evaluation methods are the foundation for fine-tuning machine learning models and help verify that they perform reliably under diverse conditions. Below are four techniques widely used to support data-driven decision-making and improve scalability in business applications:

- Holdout Method

This model evaluation technique divides your dataset into training and testing sets, often in a fixed ratio such as 70:30 or 80:20. For example, an enterprise SaaS company may train a churn-prediction model on 70% of its user data and verify the model’s accuracy on the remaining 30%. This method is computationally efficient and suited to quick validation, but its performance estimate can vary considerably depending on how the data happens to be split.
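A minimal sketch of a holdout split, assuming the records fit in a Python list. The 100 integer IDs are hypothetical stand-ins for user records, and the fixed seed keeps the split reproducible. scikit-learn’s model_selection.train_test_split does the same job and also accepts a stratify argument to preserve class proportions.

```python
import random


def holdout_split(rows, test_fraction=0.3, seed=42):
    """Shuffle a copy of the data once and split it into train and test sets."""
    shuffled = rows[:]  # copy so the caller's list is not reordered
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


users = list(range(100))  # hypothetical stand-in for 100 user records
train, test = holdout_split(users, test_fraction=0.3)
print(len(train), len(test))  # 70 30
```

Changing the seed changes which users land in the test set, and with it the measured accuracy; that sensitivity is the data-variance weakness described above.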

- Cross-Validation

Cross-validation splits the dataset several times into distinct training and test sets instead of relying on a single train-test split. For instance, a B2B e-commerce platform trying to enhance its recommendation system could use k-fold cross-validation: the dataset is split into k subsets, and the model is trained and validated k times, each time holding out a different subset for validation. Averaging over the k runs gives a more reliable performance estimate than a single split and helps reveal overfitting, which builds confidence in the model’s ability to handle unseen data.
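The k-fold procedure can be sketched in plain Python as follows. The fit and score callables are placeholders for whatever training and metric code you use; here a hypothetical majority-class baseline stands in for a real model. This version uses plain shuffled folds; scikit-learn’s KFold and StratifiedKFold are production alternatives, the latter preserving class proportions in each fold.

```python
import random


def k_fold_indices(n, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs; every index lands in exactly one validation fold."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    start = 0
    for fold in range(k):
        size = n // k + (1 if fold < n % k else 0)  # spread any remainder over early folds
        val = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, val
        start += size


def cross_validate(xs, ys, fit, score, k=5):
    """Train on k-1 folds, score on the held-out fold, repeat k times, and average."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in val_idx], [ys[i] for i in val_idx]))
    return sum(scores) / len(scores), scores


def fit_majority(xs, ys):
    """Hypothetical baseline 'model': always predict the most common training label."""
    return max(set(ys), key=ys.count)


def accuracy_of(model, xs, ys):
    return sum(1 for y in ys if y == model) / len(ys)


xs = list(range(20))
ys = [0] * 15 + [1] * 5
mean_acc, fold_scores = cross_validate(xs, ys, fit_majority, accuracy_of, k=5)
print(fold_scores, mean_acc)  # mean_acc is 0.75
```

The per-fold scores vary with how the minority class falls into each fold, while the 0.75 mean simply mirrors the 75% majority share, a reminder that any accuracy figure needs a baseline for context.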

- Bootstrapping

Bootstrapping is a statistical approach that repeatedly samples the data with replacement, so the model can be evaluated on many different resamples. For example, a supply chain management system may use bootstrapping to repeatedly test on resampled shipping data, simulating variations in real-world conditions. This method is well suited to estimating confidence intervals for a metric, and it helps assess model stability and identify anomalies so that machine learning predictions remain reliable.
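A minimal sketch of a percentile bootstrap confidence interval for accuracy, assuming you already have the model’s predictions on an evaluation set. The 100 labels and predictions below are hypothetical, and 1,000 resamples is a common but arbitrary choice.

```python
import random


def bootstrap_ci(y_true, y_pred, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for accuracy."""
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    for _ in range(n_resamples):
        sample = [rng.randrange(n) for _ in range(n)]  # draw n indices with replacement
        stats.append(sum(y_true[i] == y_pred[i] for i in sample) / n)
    stats.sort()
    lower = stats[int(alpha / 2 * n_resamples)]
    upper = stats[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


# Hypothetical evaluation results: 100 predictions, 80 of them correct.
y_true = [1] * 50 + [0] * 50
y_pred = [1] * 40 + [0] * 10 + [0] * 40 + [1] * 10
low, high = bootstrap_ci(y_true, y_pred)
print(f"accuracy 0.80, 95% CI approx. [{low:.2f}, {high:.2f}]")
```

A wide interval signals that the test set is too small to distinguish between models whose point accuracies differ by only a few points.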

- Ensemble Methods

When a single machine learning model fails to achieve the required results, ensemble approaches such as bagging, boosting, or stacking combine several models to create more accurate and reliable predictions. For example, a B2B banking firm monitoring credit risk might combine models like Random Forests and Gradient Boosting Machines to improve risk assessment. By aggregating multiple models, ensemble methods add robustness, reduce variance, and cope better with complex business data.
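Ensembles ultimately combine the predictions of several models. The sketch below shows the two simplest combination rules, hard (majority) voting and soft voting (averaging predicted probabilities), applied to outputs from three hypothetical credit-risk models. It does not implement bagging, boosting, or stacking themselves. scikit-learn’s ensemble.VotingClassifier offers the same two rules through voting="hard" and voting="soft".

```python
from collections import Counter


def hard_vote(predictions_per_model):
    """Majority vote per sample; on a tie, the label voted for first wins."""
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions_per_model)]


def soft_vote(probas_per_model, threshold=0.5):
    """Average each model's predicted P(class = 1) per sample, then apply a threshold."""
    n_models = len(probas_per_model)
    return [1 if sum(ps) / n_models >= threshold else 0 for ps in zip(*probas_per_model)]


# Hypothetical outputs of three credit-risk models on five loan applications (1 = high risk).
model_a = [1, 0, 1, 1, 0]
model_b = [1, 1, 0, 1, 0]
model_c = [0, 0, 1, 1, 1]
print(hard_vote([model_a, model_b, model_c]))  # [1, 0, 1, 1, 0]

probs_a = [0.9, 0.4, 0.7, 0.8, 0.2]
probs_b = [0.6, 0.6, 0.3, 0.9, 0.1]
probs_c = [0.2, 0.3, 0.8, 0.7, 0.6]
print(soft_vote([probs_a, probs_b, probs_c]))  # [1, 0, 1, 1, 0]
```

Soft voting uses each model’s confidence, so one very confident model can outweigh two hesitant ones; hard voting ignores confidence entirely.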

Tools and Technologies for Model Evaluation

Practical model evaluation benefits greatly from dedicated tools and platforms. Evaluation tools, including those built for LLMs, streamline processes, automate calculations, and provide actionable insights.

Key Tools:

- Scikit-learn: Provides a variety of pre-built metrics such as accuracy, precision, and recall, along with utilities like cross_val_score for cross-validation.

- TensorBoard: Visualizes metrics like loss and accuracy during training and evaluation phases.

- MLflow: Tracks experiments, organizes metrics, and manages the model lifecycle, which supports reproducible model evaluation.

- SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations): Provide interpretability by highlighting which features most influence predictions, which is important for explaining high-stakes predictions in areas like healthcare and finance.

- Google’s What-If Tool: Helps users to probe models interactively by modifying inputs and observing changes in predictions.

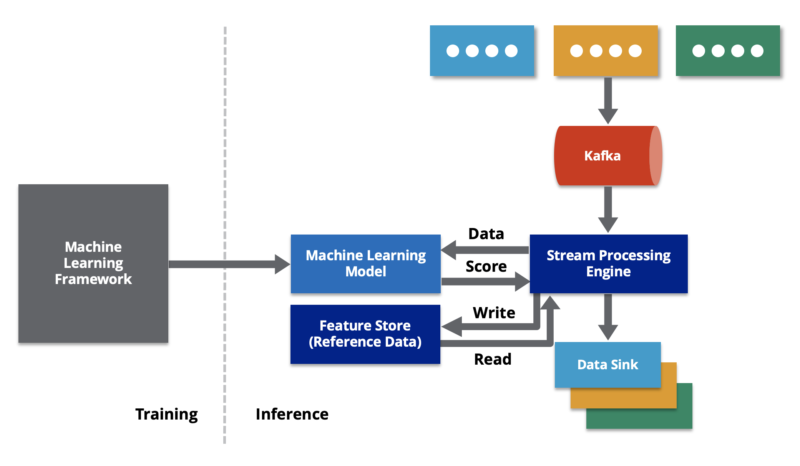

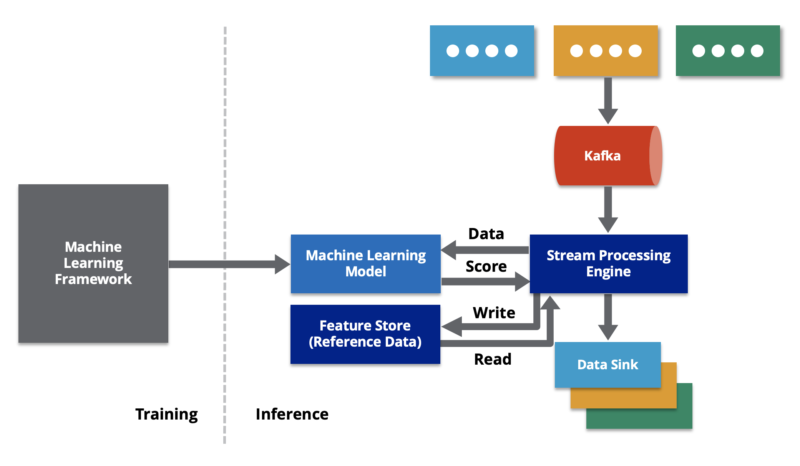
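For reference, here is a short sketch of the scikit-learn workflow mentioned above, assuming scikit-learn is installed. A synthetic, imbalanced dataset from make_classification stands in for real data, and a logistic regression is scored with stratified 5-fold cross-validation using F1 rather than accuracy.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Synthetic binary dataset with roughly an 80/20 class split (a stand-in for real data).
X, y = make_classification(n_samples=500, n_features=10, weights=[0.8, 0.2], random_state=0)

model = LogisticRegression(max_iter=1000)
# Stratified folds keep the 80/20 ratio in every training and validation split.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Score with F1 rather than accuracy because the classes are imbalanced.
scores = cross_val_score(model, X, y, cv=cv, scoring="f1")
print(f"F1 per fold: {scores.round(3)}")
print(f"Mean F1: {scores.mean():.3f} (+/- {scores.std():.3f})")
```

Swapping the scoring string (for example "roc_auc" or "precision") reuses the same folds with a different metric.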

Integration with MLOps:

- Integrating evaluation tools into production pipelines with Kubeflow or Azure ML enables continuous monitoring and retraining.

Advanced Tools:

- Alibi Explain for advanced model diagnostics.

- H2O.ai for distributed model evaluation on large datasets.

Advanced Techniques for Evaluating LLMs

Assessing Large Language Models (LLMs) requires tailored methods that go beyond traditional metrics. LLMs face unique challenges such as context retention, hallucination, and alignment with real-world knowledge.

Key Metrics:

- Perplexity: Measures how well a model predicts a sequence of tokens; lower perplexity means the model assigns higher probability to the observed text. This LLM evaluation technique is useful for assessing language generation.

- Knowledge Grounding: Checks that outputs are factually accurate by comparing generated text against trusted sources.

- Hallucination Detection: LLM evaluation tools like HELENA identify instances where the model generates nonsensical or incorrect information. For example, evaluating a GPT-style model for customer support can involve benchmarking its answers against an FAQ database.
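Perplexity is the exponential of the average negative log-likelihood per token. The sketch below assumes you already have the natural-log probability the model assigned to each observed token (how you obtain these depends on your model’s API); the three probabilities are made up.

```python
import math


def perplexity(token_log_probs):
    """exp of the mean negative natural-log probability of the observed tokens."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


# Hypothetical probabilities a model assigned to three consecutive tokens.
log_probs = [math.log(p) for p in (0.5, 0.25, 0.125)]
print(round(perplexity(log_probs), 6))  # 4.0
```

A perplexity of 4 means the model was, on average, as uncertain as if it were choosing uniformly among four tokens. Scores are only comparable between models that share the same tokenizer and evaluation text.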

Ethical Considerations:

- Bias audits using frameworks like AI Fairness 360 or Google’s Responsible AI practices.

- Testing for multilingual performance using metrics like BLEU and ROUGE across diverse languages.
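One metric commonly reported in bias audits, including by AI Fairness 360, is statistical parity difference: the positive-prediction rate for an unprivileged group minus the rate for a privileged group, where 0 indicates parity. A plain-Python sketch, with hypothetical group labels and approval predictions:

```python
def positive_rate(preds):
    return sum(preds) / len(preds)


def statistical_parity_difference(y_pred, groups, unprivileged, privileged):
    """P(pred = 1 | unprivileged) - P(pred = 1 | privileged); 0 means equal rates."""
    unpriv = [p for p, g in zip(y_pred, groups) if g == unprivileged]
    priv = [p for p, g in zip(y_pred, groups) if g == privileged]
    return positive_rate(unpriv) - positive_rate(priv)


# Hypothetical approval predictions for applicants in two groups, "A" and "B".
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(statistical_parity_difference(y_pred, groups, unprivileged="B", privileged="A"))  # -0.5
```

A negative value means the unprivileged group receives positive predictions less often. Which groups count as privileged, and what gap is acceptable, are policy decisions that the metric itself cannot make.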

Best Practices and Evaluation Use Cases

Continuous refinement and fine-tuning are required to sustain and improve the performance of LLMs over time. Here’s how to keep your machine learning models at the top of their game:

- Broader Language Testing: Depending on your organization’s infrastructure, expand evaluations beyond Python to include programming languages such as R, Julia, or Java. This ensures adaptability and cross-language functionality.

- Continuous Improvement: Regularly update and test models using techniques like data augmentation, transfer learning, and adversarial testing. This iterative process proactively identifies weaknesses and resolves them.

- Constant Monitoring: Use tools like MLflow, SageMaker, or TensorBoard to monitor performance metrics in real time and retrain models based on new datasets or changing scenarios.

- Feedback Loops: Incorporate user-generated feedback via APIs or automated workflows to align model outputs with end-user expectations, improving satisfaction and relevance.

- Performance Tracking: Implement reliable monitoring tools such as Grafana or Prometheus to track model performance across varied scenarios and identify areas for refinement.

- Prompt Optimization: Refine prompts iteratively, and combine this with hyperparameter tuning and error analysis to improve your LLM’s capabilities continuously. These practices help improve reliability over time.

- Regular Benchmarking: Compare model performance with human baselines or industry-standard benchmarks such as GLUE for NLP models or ImageNet for computer vision tasks. This helps gauge competitiveness and alignment with industry trends.
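Monitoring and retraining decisions usually need a concrete drift signal. One widely used option, not specific to any tool named above, is the Population Stability Index (PSI), which compares how a feature’s values are distributed in a baseline sample versus a recent one. The sketch below uses equal-width bins taken from the baseline and hypothetical data; the commonly quoted rule of thumb (below 0.1 stable, above 0.25 a significant shift) is a convention, not a hard limit.

```python
import math


def psi(baseline, recent, bins=10, eps=1e-6):
    """Population Stability Index of one numeric feature: baseline vs. recent sample."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid dividing by zero for a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            i = int((v - lo) / width)
            counts[min(max(i, 0), bins - 1)] += 1  # clamp out-of-range values to edge bins
        return [max(c / len(values), eps) for c in counts]  # eps keeps log() finite

    b, r = proportions(baseline), proportions(recent)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))


baseline = [i / 100 for i in range(100)]      # hypothetical training-time feature values
recent = [0.5 + i / 200 for i in range(100)]  # hypothetical production values, shifted up
print(round(psi(baseline, baseline), 4))  # 0.0
print(round(psi(baseline, recent), 2))
```

A PSI above the chosen threshold is a reasonable trigger for closer inspection or retraining; it says the inputs changed, not that accuracy dropped, so it complements rather than replaces tracking the metrics themselves.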

Keeping your models updated and fine-tuned is critical for sustaining peak performance. Here are some ways companies put well-evaluated, iteratively improved models to use:

- Code Generation: Automate routine coding tasks to increase productivity and free developers to concentrate on solving complex problems.

- Error Correction: Use feedback-driven methodologies to continuously debug and optimize models, increasing their robustness.

- Cross-Language Development: Use LLMs to translate code between different programming languages, increasing versatility and expanding your models to new domains.

- CI/CD Integration: Automate testing and error repair in your CI/CD pipelines. This helps ensure that your models keep functioning as expected, even as they evolve.
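A minimal sketch of the CI/CD idea: a quality gate that compares a candidate model’s evaluation metrics against minimum thresholds. The metric names and threshold values are hypothetical; a pipeline step would typically run a check like this after evaluation and exit with a non-zero status when any check fails.

```python
# Hypothetical minimum scores a candidate model must reach before release.
THRESHOLDS = {"f1": 0.80, "recall": 0.75}


def check_quality_gate(metrics, thresholds=THRESHOLDS):
    """Return a list of failure messages; an empty list means the gate passes."""
    failures = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif value < minimum:
            failures.append(f"{name}: {value:.3f} < {minimum:.3f}")
    return failures


# In a real pipeline these numbers would come from evaluating the candidate on held-out data.
candidate = {"f1": 0.83, "recall": 0.71}
for problem in check_quality_gate(candidate):
    print("FAIL", problem)  # FAIL recall: 0.710 < 0.750
```

Treating a missing metric as a failure prevents a broken evaluation step from silently passing the gate.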

Challenges in ML Model Evaluation

ML model evaluation frameworks can face several challenges, each with practical implications:

- Data Leakage

This occurs when test data unintentionally influences the training process. For example, if features are computed using statistics from the test set (such as a normalization mean) and then used in training, accuracy scores become inflated and results over-optimistic. Partitioning the data before any preprocessing and auditing feature engineering steps help mitigate this risk.
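The usual fix for preprocessing leakage is to split first and then learn any preprocessing statistics from the training split alone. A small sketch with a hand-rolled standardizer and made-up numbers; scikit-learn’s Pipeline achieves the same effect by refitting preprocessing inside each cross-validation fold.

```python
import statistics


def fit_standardizer(train_values):
    """Learn mean and (population) standard deviation from the training split only."""
    mean = statistics.fmean(train_values)
    std = statistics.pstdev(train_values) or 1.0  # guard against a constant feature
    return mean, std


def standardize(values, params):
    mean, std = params
    return [(v - mean) / std for v in values]


# Hypothetical feature values, already split before any preprocessing.
train = [10.0, 12.0, 14.0, 16.0]
test = [30.0, 32.0]

params = fit_standardizer(train)          # correct: statistics come from train only
train_scaled = standardize(train, params)
test_scaled = standardize(test, params)   # test data is transformed, never fitted on

# Leaky (avoid): fitting on train + test lets test statistics shape the training features.
leaky_params = fit_standardizer(train + test)
print(params[0], leaky_params[0])  # 13.0 19.0
```

The gap between the two means shows how much information from the test set would otherwise have leaked into the features the model trains on.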

- Class Imbalance

This arises when certain classes dominate the dataset, leading to biased models. For example, in medical diagnosis, rare diseases may be underrepresented, resulting in poor predictions. Solutions include oversampling minority classes, undersampling majority classes, or employing techniques like SMOTE (Synthetic Minority Oversampling Technique).
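A minimal sketch of random oversampling, the simplest of the remedies listed above: it duplicates randomly chosen rows of smaller classes until each class is as large as the biggest one. SMOTE differs in that it creates new synthetic points by interpolating between a minority sample and its nearest minority-class neighbors; the imbalanced-learn library provides an implementation. Either way, resample only the training split so the test set still reflects the real class balance. The data below is hypothetical.

```python
import random
from collections import Counter


def random_oversample(xs, ys, seed=0):
    """Duplicate random rows of smaller classes until every class matches the largest."""
    rng = random.Random(seed)
    counts = Counter(ys)
    target = max(counts.values())
    out_x, out_y = list(xs), list(ys)
    for label, count in counts.items():
        members = [x for x, y in zip(xs, ys) if y == label]
        for _ in range(target - count):
            out_x.append(rng.choice(members))
            out_y.append(label)
    return out_x, out_y


# Hypothetical training split: five "healthy" rows and one "rare disease" row.
train_x = [[0.1], [0.2], [0.3], [0.4], [0.5], [0.9]]
train_y = [0, 0, 0, 0, 0, 1]
bal_x, bal_y = random_oversample(train_x, train_y)
print(Counter(bal_y))  # Counter({0: 5, 1: 5})
```

Because the added rows are exact copies, heavy oversampling can encourage overfitting to the few minority examples, which is one motivation for SMOTE’s interpolated samples.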

- Overfitting

When a model performs exceptionally well on training data but poorly on unseen data, it indicates overfitting. For example, complex neural networks can memorize patterns from noisy data, reducing generalization. Regularization techniques such as L1/L2 penalties, dropout layers, or early stopping are essential to counteract this issue.
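Early stopping is easy to express as a loop over per-epoch validation losses: remember the best epoch, and stop once the loss fails to improve for a fixed number of epochs (the patience). The loss values below are hypothetical; frameworks such as Keras ship this logic as a callback (tf.keras.callbacks.EarlyStopping).

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses.

    Training stops once the loss has not improved by more than min_delta for
    `patience` consecutive epochs; the weights from best_epoch would be kept.
    """
    best_loss = float("inf")
    best_epoch = 0
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


# Hypothetical validation losses: improvement stalls after epoch 3.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60]
print(early_stopping_epoch(val_losses))  # (3, 6)
```

Training halts at epoch 6, after three epochs without improvement, and the model from epoch 3, where validation loss was lowest, is the one worth keeping.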

Bottom Line

Effective machine learning model evaluation is both a strategic and technical necessity. Using techniques such as error analysis, performance benchmarking, and robust evaluation frameworks, businesses can derive actionable insights from their data. With Hyqoo, your organization can accelerate AI implementation, reduce operational inefficiencies, and unlock smart data management capabilities. Whether you’re optimizing ML models or exploring advanced AI solutions, Hyqoo provides expertise to ensure your models consistently outperform benchmarks and achieve deployment success.

FAQ

1. How can human feedback improve machine learning model evaluation?

Human feedback is essential in improving model evaluation. It is especially important for tasks involving natural language understanding or user interactions. Businesses can identify issues like unclear predictions or inappropriate responses by integrating real-world user feedback. This feedback loop helps align model outputs with user expectations. It assists in improving relevance, usability, and all-around accuracy.

2. What is the role of interpretability in model evaluation?

Interpretability is key to understanding why a model makes specific predictions. Tools like SHAP and LIME help visualize feature importance, enabling businesses to build trust and ensure ethical use of models. This is particularly important in industries like healthcare and finance, where explainability matters for compliance and decision-making.

3. How does data augmentation contribute to effective model evaluation?

Data augmentation enriches model evaluation by creating synthetic data to test edge cases and improve generalization. Techniques like image rotation, noise addition, or oversampling rare classes provide diverse scenarios for evaluation. They help ensure that models remain robust to variations in input data, which leads to better performance on unseen datasets.